Live Lambda Development

SST features a local development environment that lets you debug and test your Lambda functions locally.

Overview

Live Lambda Development, or Live Lambda, is a feature of SST that lets you debug and test your Lambda functions locally while they are invoked remotely by resources in AWS. It works by proxying requests from your AWS account to your local machine.

info

If the runtime shown for a deployed Lambda function is different from the one you've defined, it's because SST creates a stub Lambda function for sst dev.

Changes are automatically detected, built, and live reloaded in under 10 milliseconds. You can also use breakpoints to debug your functions live with your favorite IDE.

Quick start

To give it a try, create a new SST app by running npx create-sst@two. Once the app is created, install the dependencies.

To start the Live Lambda Development environment, run:

npx sst dev

The first time you run this, it'll deploy your app to AWS. This can take a couple of minutes.

Behind the scenes

When this command is first run for a project, you will be prompted for a default stage name.

Look like you’re running sst for the first time in this directory.

Please enter a stage name you’d like to use locally.

Or hit enter to use the one based on your AWS credentials (spongebob):

It'll suggest that you use a stage name based on your AWS username. This value is stored in a .sst directory in the project root and should not be checked into source control.

A stage ensures that you are working in an environment that is separate from other people on your team, and from your production environment. It's meant to be unique.

The starter deploys a Lambda function with an API endpoint. You'll see something like this in your terminal.

Outputs:

ApiEndpoint: https://s8gecmmzxf.execute-api.us-east-1.amazonaws.com

If you head over to the endpoint, it'll invoke the Lambda function in packages/functions/src/lambda.js. You can try changing this file and hitting the endpoint again. You should see your changes reflected right away!

Before we look at how Live Lambda works behind the scenes, let's start with a little bit of background.

Background

Working on Lambda functions locally can be painful. You have to either:

Locally mock all the services that your Lambda function uses

Like API Gateway, SNS, SQS, etc. This is hard to do. If you are using a tool that mocks a specific service (like API Gateway), you won't be able to test a Lambda that's invoked by a different service (like SNS). On the other hand, a service like LocalStack, which tries to mock a whole suite of services, is slow, and the mocked services can be out of date.

Or, you'll need to deploy your changes to test them

Each deployment can take at least a minute. And repeatedly deploying to test a change really slows down the feedback loop.

How it works

To fix this, we created Live Lambda — a local development environment for Lambda functions. It works by proxying requests from Lambda functions to your local machine. This allows SST to run the local version of a function with the event, context, and credentials of the remote Lambda function.

SST uses AWS IoT over WebSocket to communicate between your local machine and the remote Lambda function. Every AWS account comes with an AWS IoT Core endpoint by default. You can publish events to it and subscribe to them.

Live Lambda v1

Prior to SST v2, Live Lambda was implemented using a WebSocket API and a DynamoDB table. These were deployed to your account as a separate stack in your app.

This new IoT approach is a lot faster, roughly 2-3x, and it does not require any additional infrastructure.

Here's how it works.

- When you run sst dev, it deploys your app and replaces the Lambda functions with a stub version.

- It also starts up a local WebSocket client and connects to your AWS account's IoT endpoint.

- Now, when a Lambda function in your app is invoked, it publishes an event, where the payload is the Lambda function request.

- Your local WebSocket client receives this event. It publishes an event acknowledging that it received the request.

- Next, it runs the local version of the function and publishes an event with the function response as the payload. The local version is run as a Node.js Worker.

- Finally, the stub Lambda function receives the event and responds with the payload.
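To make the steps above concrete, here is a minimal in-memory sketch of the request, acknowledgement, and response exchange. It is not SST's implementation: an InMemoryBus class stands in for the AWS IoT Core endpoint, and the event names (request, ack, response), the message shapes, and the helpers connectLocalClient and createStub are hypothetical. Only the /sst/<app>/<stage>/<event> topic format comes from this page.

```javascript
// In-memory sketch of the Live Lambda message flow.
// ASSUMPTIONS: InMemoryBus stands in for the AWS IoT Core endpoint, and the event
// names ("request", "ack", "response") and message shapes are hypothetical.

// Topics follow the /sst/<app>/<stage>/<event> format.
const topic = (app, stage, name) => `/sst/${app}/${stage}/${name}`;

class InMemoryBus {
  constructor() {
    this.subscribers = new Map(); // topic -> Set of listener functions
    this.published = []; // every topic published to, in order
  }
  subscribe(name, listener) {
    if (!this.subscribers.has(name)) this.subscribers.set(name, new Set());
    this.subscribers.get(name).add(listener);
    return () => this.subscribers.get(name).delete(listener); // unsubscribe
  }
  publish(name, message) {
    this.published.push(name);
    // Copy the listeners so one of them can unsubscribe while we iterate.
    for (const listener of [...(this.subscribers.get(name) ?? [])]) listener(message);
  }
}

// Local side: acknowledge each request, run the local function, publish its result.
function connectLocalClient(bus, app, stage, localHandler) {
  bus.subscribe(topic(app, stage, "request"), async ({ requestID, event }) => {
    bus.publish(topic(app, stage, "ack"), { requestID });
    const response = await localHandler(event);
    bus.publish(topic(app, stage, "response"), { requestID, response });
  });
}

// Remote side: the stub publishes the request and resolves with the matching response.
function createStub(bus, app, stage) {
  let nextID = 0;
  return function stubHandler(event) {
    const requestID = `req-${++nextID}`;
    return new Promise((resolve) => {
      const unsubscribe = bus.subscribe(topic(app, stage, "response"), (message) => {
        if (message.requestID !== requestID) return; // response for another invocation
        unsubscribe();
        resolve(message.response);
      });
      bus.publish(topic(app, stage, "request"), { requestID, event });
    });
  };
}

// Usage
const bus = new InMemoryBus();
connectLocalClient(bus, "my-app", "spongebob", async (event) => ({
  statusCode: 200,
  body: `Hello, ${event.name}!`,
}));
const stub = createStub(bus, "my-app", "spongebob");
stub({ name: "SST" }).then((result) => {
  console.log(result.body); // → Hello, SST!
  console.log(bus.published); // request, ack and response topics, in that order
});
```

Because every in-flight invocation listens on the same response topic, the stub filters messages by request ID. A real implementation would also need timeouts and error handling, which this sketch leaves out.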

Behind the scenes

The AWS IoT events are published using the format /sst/<app>/<stage>/<event>, where app is the name of the app and stage is the stage that sst dev is deployed to.

Each Lambda function invocation is made up of three events: the original request fired by the remote Lambda function, the local CLI acknowledging the request, and the response fired by the local CLI.

If an event's payload is larger than 50kb, it gets chunked into separate events.
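The chunking rule can be sketched as follows. This is an illustration under stated assumptions, not SST's code: "50kb" is taken to mean 50 * 1024 bytes, payloads are assumed to be JSON-serializable, and the { id, index, count, data } chunk shape and the toChunks and createAssembler helpers are hypothetical.

```javascript
// Sketch of chunking an event payload that exceeds the size limit.
// ASSUMPTIONS: "50kb" is taken as 50 * 1024 bytes, payloads are JSON-serializable,
// and the { id, index, count, data } chunk shape is hypothetical.
const CHUNK_SIZE = 50 * 1024;

// Serialize the payload and slice it into byte ranges of at most `size` bytes.
function toChunks(id, payload, size = CHUNK_SIZE) {
  const bytes = Buffer.from(JSON.stringify(payload), "utf8");
  const count = Math.max(1, Math.ceil(bytes.length / size));
  const chunks = [];
  for (let index = 0; index < count; index++) {
    const data = bytes.subarray(index * size, (index + 1) * size);
    chunks.push({ id, index, count, data });
  }
  return chunks;
}

// Buffer chunks per id (in any order) and return the payload once all have arrived.
function createAssembler() {
  const pending = new Map();
  return function receive({ id, index, count, data }) {
    const parts = pending.get(id) ?? new Array(count);
    parts[index] = data;
    pending.set(id, parts);
    // filter() skips empty slots, so this counts the chunks received so far.
    if (parts.filter(Boolean).length < count) return undefined;
    pending.delete(id);
    // Join the raw bytes before decoding so multi-byte characters split across chunks survive.
    return JSON.parse(Buffer.concat(parts).toString("utf8"));
  };
}

// Usage: a ~120 KB event becomes three chunks that can arrive out of order.
const event = { body: "x".repeat(120 * 1024) };
const chunks = toChunks("req-1", event);
console.log(chunks.length); // → 3

const receive = createAssembler();
let restored;
for (const chunk of [...chunks].reverse()) restored = receive(chunk) ?? restored;
console.log(restored.body.length === event.body.length); // → true
```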

So while the function is executed locally, from the outside it looks like it ran in AWS. This approach has several advantages that we'll look at below.

Cost

AWS IoT that powers Live Lambda is completely serverless. So you don't get charged when it's not in use.

It's also pretty cheap, with a free tier of 500k events per month and roughly $1.00 per million for the next billion messages. You can check out the details here.

As a result, this approach works well even when there are multiple developers on your team.
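To get a feel for the numbers, here is a back-of-the-envelope estimator that combines the three events per invocation described earlier with the pricing quoted above. It is only a rough sketch: it ignores chunked payloads and any other AWS IoT charges, the team size in the usage line is hypothetical, and the hard-coded figures should be checked against current AWS pricing.

```javascript
// Rough monthly estimate of the AWS IoT events generated by Live Lambda.
// ASSUMPTIONS: 3 events per invocation (request, acknowledgement, response),
// no chunking, and the free tier and per-million price quoted in this doc.
const EVENTS_PER_INVOCATION = 3;
const FREE_EVENTS_PER_MONTH = 500_000;
const USD_PER_MILLION_EVENTS = 1.0;

function estimateMonthlyCost(invocationsPerMonth) {
  const events = invocationsPerMonth * EVENTS_PER_INVOCATION;
  // Only events beyond the free tier are billed.
  const billable = Math.max(0, events - FREE_EVENTS_PER_MONTH);
  const usd = (billable / 1_000_000) * USD_PER_MILLION_EVENTS;
  return { events, billable, usd };
}

// Hypothetical team: 4 developers, 2,000 invocations each per workday, 22 workdays.
const invocations = 4 * 2_000 * 22; // 176,000
console.log(estimateMonthlyCost(invocations).usd); // → 0.028
```

Even this fairly busy hypothetical team stays just over the free tier, which is why the per-developer cost is negligible.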

Privacy

All the data stays between your local machine and your AWS account. No third-party services are used.

Live Lambda also supports connecting to AWS resources inside a VPC. We'll look at this below.

Languages

Live Lambda and setting breakpoints are supported in the following languages.

| Language | Live Lambda | Set breakpoints |
|---|---|---|
| JavaScript | | |
| TypeScript | | |
| Python | | |
| Golang | | |
| Java | | |
| C# | | |
| F# | | |

Supported regions

Live Lambda is supported in regions where AWS IoT is available. Here is a list of the currently supported regions.

Note that region support only matters in dev mode (i.e., sst dev). Your app can still be deployed to any AWS region (i.e., sst deploy).

For instance, if you're deploying to a region without AWS IoT support, like Europe Milan (eu-south-1), select an alternative region for sst dev, such as Europe London (eu-west-2).

Advantages

The Live Lambda approach has several advantages.

- You can work on your Lambda functions locally and set breakpoints in VS Code.

- Interact with the entire infrastructure of your app as it has been deployed to AWS. This is really useful for testing webhooks because you have an endpoint that is not on localhost.

- Supports all Lambda triggers, so there's no need to mock API Gateway, SQS, SNS, etc.

- Supports real Lambda environment variables.

- Supports Lambda IAM permissions, so if a Lambda fails on AWS due to the lack of IAM permissions, it would fail locally as well.

- And it's fast! It's 50-100x faster than alternatives like SAM Accelerate or CDK Watch.

How Live Lambda is different

Other serverless frameworks have tried to address the problem of local development with Lambda functions. Let's look at how Live Lambda is different.

Serverless Offline

Serverless Framework has a plugin called Serverless Offline that developers use to work on their applications locally.

It emulates Lambda and API Gateway locally. Unfortunately, this doesn't work if your functions are triggered by other AWS services, so you'll need to create mock Lambda events.

SAM Accelerate

AWS SAM features SAM Accelerate to help with local development. It directly updates your Lambda functions without doing a full deployment of your app.

However, this is still too slow because it needs to bundle and upload your Lambda function code to AWS. It can take a few seconds. Live Lambda in comparison is 50-100x faster.

CDK Watch

AWS CDK has something called CDK Watch to speed up local development. It watches for file changes and updates your Lambda functions without having to do a full deployment.

However, this is too slow because it needs to bundle and upload your Lambda function code. It can take a few seconds. Live Lambda in comparison is 50-100x faster.

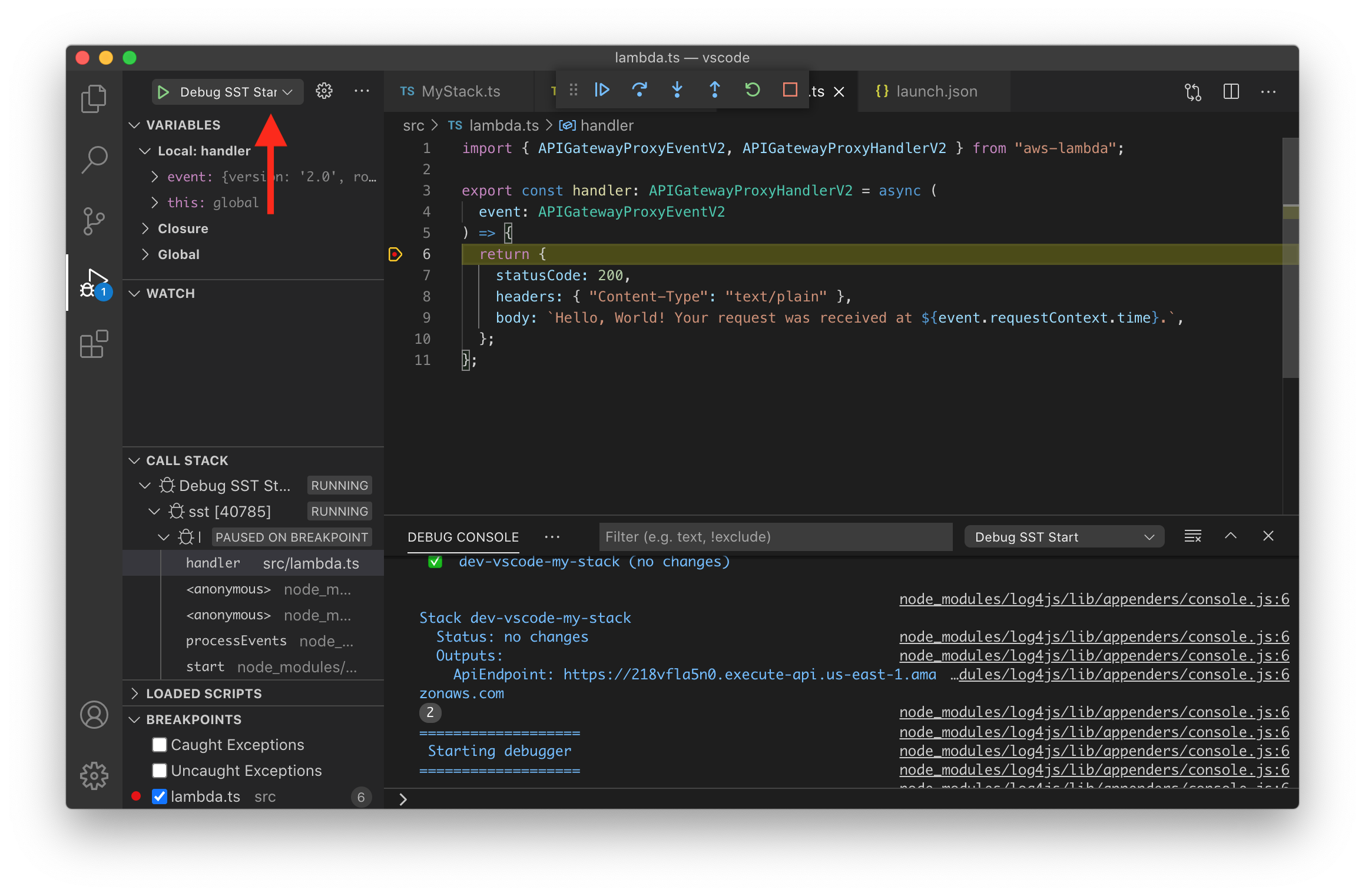

Debugging With VS Code

The Live Lambda Development environment runs a Node.js process locally. This allows you to use Visual Studio Code to debug your serverless apps live.

Let's look at how to set this up.

Launch configurations

Add the following to .vscode/launch.json.

{
  "version": "0.2.0",
  "configurations": [
    {
      "name": "Debug SST Dev",
      "type": "node",
      "request": "launch",
      "runtimeExecutable": "${workspaceRoot}/node_modules/.bin/sst",
      "runtimeArgs": ["dev", "--increase-timeout"],
      "console": "integratedTerminal",
      "skipFiles": ["<node_internals>/**"]
    }
  ]
}

This launch configuration runs the sst dev command in debug mode, allowing you to set breakpoints in your Lambda functions. We also have an example project with a VS Code setup that you can use as a reference.

tip

If you are using one of our starters, you should already have a .vscode directory in your project root.

Debug Lambda functions

Next, head over to the Run and Debug tab and select Debug SST Dev as the debug configuration.

Now you can set a breakpoint and start your app by pressing F5 or by clicking Run > Start Debugging. When your Lambda function is then triggered, VS Code will stop at your breakpoint.

Increasing timeouts

By default, a Lambda function's timeout might not be long enough for you to inspect the breakpoint info, so we need to increase it. We do this with the --increase-timeout option for the sst dev command in our launch.json.

"runtimeArgs": ["dev", "--increase-timeout"],

This increases our Lambda function timeouts to their maximum value of 15 minutes. For APIs, the timeout cannot be increased beyond 30 seconds, but you can continue debugging the Lambda function even after the API request times out.

Debugging with WebStorm

You can also set breakpoints and debug your Lambda functions locally with WebStorm. Check out this tutorial for more details.

note

In some versions of WebStorm, you might need to allow the debugger to step into library scripts. You can do this by heading to Preferences > Build, Execution, Deployment > Debugger > Stepping and unchecking Do not step into library scripts.

Debugging with IntelliJ IDEA

If you are using IntelliJ IDEA, follow this tutorial to set breakpoints in your Lambda functions.

Built-in environment variables

SST sets the IS_LOCAL environment variable to true for functions running inside sst dev.

export async function main(event) {
  // IS_LOCAL is set when the function runs inside sst dev
  const body = process.env.IS_LOCAL ? "Hello, Local!" : "Hello, World!";
  return {
    body,
    statusCode: 200,
    headers: { "Content-Type": "text/plain" },
  };
}
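Environment variables are always strings, so IS_LOCAL arrives as the string "true" rather than a boolean. Here is a minimal sketch of the same handler that compares it explicitly and takes the environment as a parameter, so both branches can be unit tested without running sst dev. The buildResponse helper and its env parameter are hypothetical, not part of SST.

```javascript
// Sketch: same response as the handler above, but with the environment injectable for tests.
// ASSUMPTION: buildResponse and its env parameter are hypothetical helpers, not part of SST.
function buildResponse(env) {
  // Environment variables are strings; compare against "true" explicitly so that
  // a value like "false" is not treated as truthy.
  const isLocal = env.IS_LOCAL === "true";
  return {
    body: isLocal ? "Hello, Local!" : "Hello, World!",
    statusCode: 200,
    headers: { "Content-Type": "text/plain" },
  };
}

// In a real handler file you would export this, as in the example above.
async function main(event) {
  return buildResponse(process.env);
}

console.log(buildResponse({ IS_LOCAL: "true" }).body); // → Hello, Local!
console.log(buildResponse({}).body); // → Hello, World!
```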

Working with a VPC

If you have resources like RDS instances deployed inside a VPC, and you are not using the Data API to talk to the database, you have the following options.

Connect to a VPC

By default your local Lambda function cannot connect to the database in a VPC. You need to:

- Set up a VPN connection from your local machine to your VPC network. You can use the AWS Client VPN service to set it up. Follow the Mutual authentication section in this doc to set up the certificates and import them into AWS Certificate Manager.

- Then create a Client VPN endpoint and associate it with your VPC.

- Finally, install Tunnelblick locally to establish the VPN connection.

Note that the AWS Client VPN service is billed on an hourly basis, but it's fairly inexpensive. Read more on the pricing here.

Connect to a local DB

Alternatively, you can run the database server locally (e.g., MySQL or PostgreSQL). In your function code, you can then connect to the local server if IS_LOCAL is set:

const dbHost = process.env.IS_LOCAL
  ? "localhost"
  : "amazon-string.rds.amazonaws.com";
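Building on the snippet above, here is a sketch that picks all of the connection settings in one place. DB_HOST, DB_PORT, DB_USER, and DB_NAME are hypothetical environment variables you would set on the function yourself, and the local defaults assume a PostgreSQL server on its default port 5432 with a hypothetical database named app.

```javascript
// Sketch: pick database connection settings depending on where the function runs.
// ASSUMPTIONS: DB_HOST, DB_PORT, DB_USER and DB_NAME are hypothetical variables you
// define yourself; the local defaults assume PostgreSQL on its default port.
function getDbConfig(env = process.env) {
  if (env.IS_LOCAL === "true") {
    // Running inside sst dev: use the database server on your machine.
    return {
      host: "localhost",
      port: 5432,
      user: env.DB_USER ?? "postgres",
      database: env.DB_NAME ?? "app",
    };
  }
  // Deployed: use the RDS endpoint supplied through the function's environment.
  return {
    host: env.DB_HOST,
    port: Number(env.DB_PORT ?? 5432),
    user: env.DB_USER,
    database: env.DB_NAME,
  };
}

console.log(getDbConfig({ IS_LOCAL: "true" }).host); // → localhost
```

The returned object can be handed to whichever database driver you use. Passwords are deliberately left out; in practice you would load them from a secret store rather than hard-coding them.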

Infrastructure changes

In addition to the changes made to your Lambda functions, sst dev also watches for infrastructure changes and will automatically redeploy them.